Agent migration

EKS Intelligence has deprecated support for the OpenTelemetry agent and replaced it with the unified Kubernetes Intelligence agent.

This page explains how to migrate to the Kubernetes Intelligence agent if you've deployed the OpenTelemetry Collector agent on your clusters. If you're new to EKS Intelligence, follow the Kubernetes Intelligence onboarding instructions to install the agent. If you've already set up Kubernetes Intelligence, you can start using EKS Intelligence straightaway if it is included in your DoiT Cloud Intelligence plan.

Migrate to Kubernetes Intelligence agent

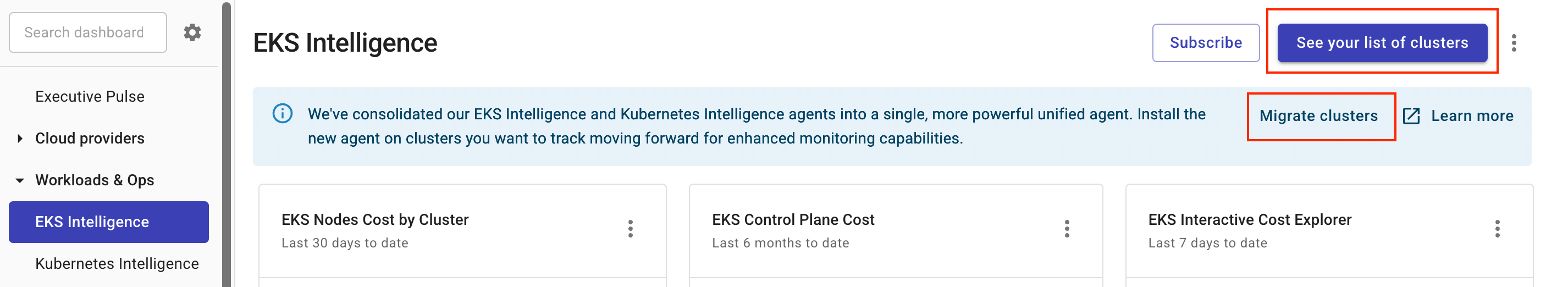

To migrate to the Kubernetes Intelligence agent, sign in to the DoiT console, navigate to the EKS Intelligence dashboard, and then select See your list of clusters or Migrate clusters.

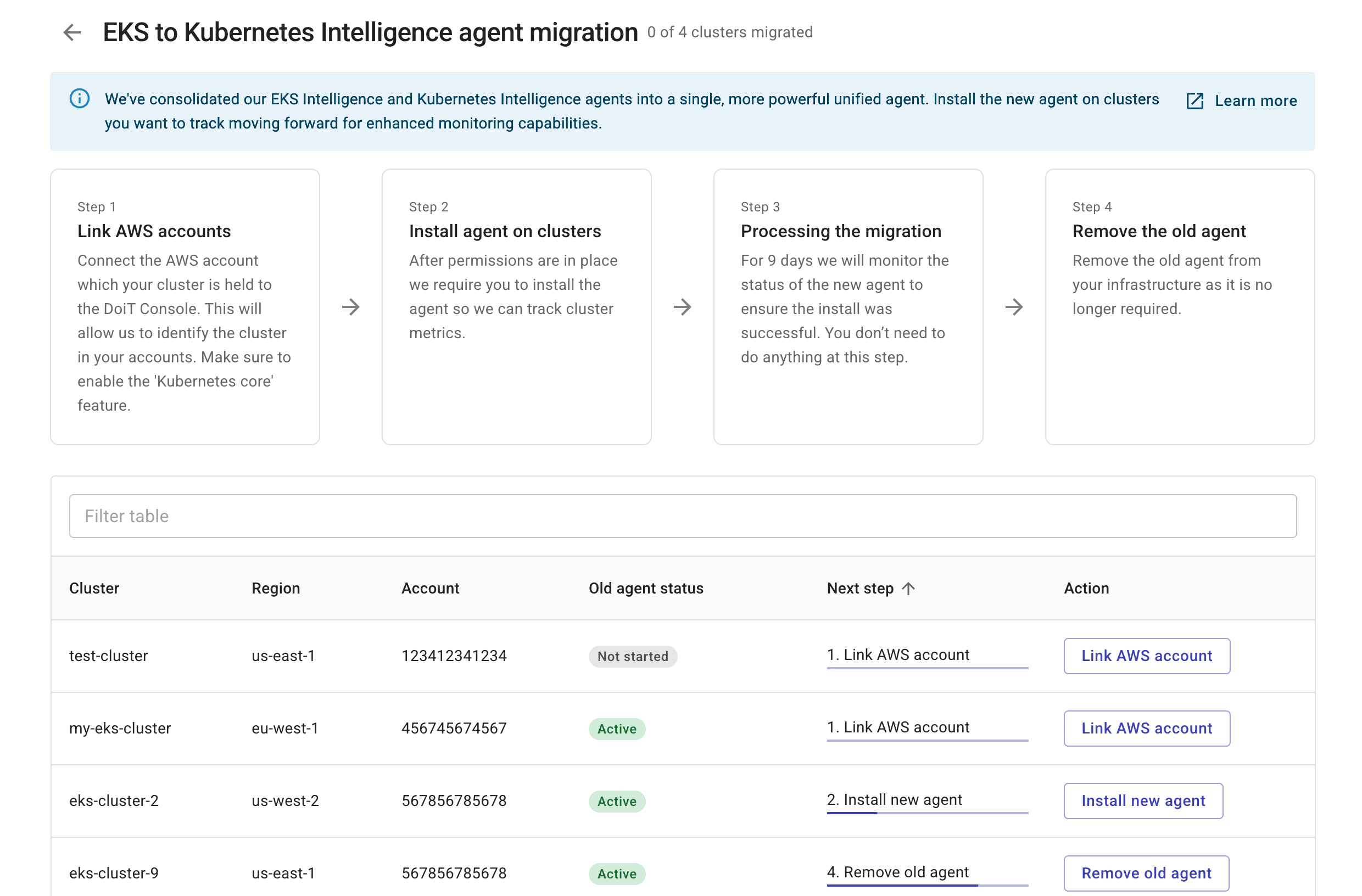

You'll be directed to the EKS agent migration page, where you can check the overall status and follow the step-by-step instructions to complete the migration.

Step 1: Link AWS account

In this step, you link your AWS accounts and enable the Kubernetes core feature.

DoiT scans the connected AWS accounts in your cloud environment once a day, at midnight UTC. Once the scan finds clusters in your accounts, you can proceed to the next step.
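Before moving on, it can help to confirm that EKS clusters exist in the linked account. The following is a minimal sketch, assuming the AWS CLI is installed and configured with credentials for that account. The function name and the region names are placeholders. It only lists clusters on the AWS side and does not show whether the daily scan has picked them up yet.

```shell
# List EKS cluster names in each given region.
# Assumes the AWS CLI is configured for the linked account.
list_eks_clusters() {
  for region in "$@"; do
    echo "== $region =="
    aws eks list-clusters --region "$region" --query 'clusters[]' --output text
  done
}

# Example (region names are placeholders):
#   list_eks_clusters us-east-1 eu-west-1
```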

Step 2: Install agent on clusters

Perform this step for each cluster. See Install agent via Helm chart.

Step 3: Processing the migration

Once the new agent is installed, the cluster enters a 9-day observation period during which we monitor the new agent to ensure that it works properly.

- You cannot speed up or stop the process before the observation period ends.

- You should not remove the old agent during the observation period.

- You can continue using the EKS Intelligence dashboard or analyzing EKS costs in Cloud Analytics reports as usual. The migration process doesn't affect the EKS data.
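To plan when the old agent can be removed, you can estimate the end of the observation period. This is a rough sketch that assumes the 9 days are counted from the date the new agent was installed. The status shown on the EKS agent migration page remains the authoritative source. The function name is hypothetical, and the command relies on GNU date syntax, so it won't work with the BSD date shipped on macOS.

```shell
# Print the estimated end date of the 9-day observation period.
# $1: installation date of the new agent, as YYYY-MM-DD (assumed start of the period).
observation_end() {
  date -u -d "$1 + 9 days" +%F
}

# Example with a placeholder installation date.
observation_end 2024-03-01
```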

Step 4: Remove old agent

Perform this step for each cluster. Select Remove old agent to get instructions that match the way the old agent was installed:

- Terraform

  Run the terraform destroy command to destroy the full stack based on your CLUSTERNAME.tf file.

  You can also use the -target option to destroy a single resource. For example, terraform destroy -target RESOURCE_TYPE.NAME.

- CloudFormation with Helm

  - Delete the CloudFormation stack of the cluster from your AWS account. See Deleting a stack.

  - Run the helm uninstall doit-eks-lens command to delete the agent (OpenTelemetry Collector) from Kubernetes.

- CloudFormation with kubectl

  - Delete the CloudFormation stack of the cluster from your AWS account. See Deleting a stack.

  - Run the kubectl delete -f DEPLOYMENT_YAML_FILE command from AWS CloudShell to delete the agent (OpenTelemetry Collector) configuration.
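The removal commands for the three installation methods above can be sketched as a small shell helper. This is an illustration, not the official procedure: the method labels (terraform, cfn-helm, cfn-kubectl), the run and remove_old_agent function names, and the STACK_NAME variable are hypothetical, and deleting the stack with the AWS CLI is an assumption (the console works equally well). Because these commands are destructive, the sketch only prints them by default. Set DRY_RUN=0 to execute them.

```shell
# Print a command (default) or execute it when DRY_RUN=0.
run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Remove the old agent for one cluster.
# $1: how the old agent was installed (hypothetical labels).
# STACK_NAME and DEPLOYMENT_YAML_FILE are placeholders you must set.
remove_old_agent() {
  case "$1" in
    terraform)
      # Run from the directory that holds the cluster's Terraform configuration.
      run terraform destroy
      ;;
    cfn-helm)
      run aws cloudformation delete-stack --stack-name "$STACK_NAME"
      run helm uninstall doit-eks-lens
      ;;
    cfn-kubectl)
      run aws cloudformation delete-stack --stack-name "$STACK_NAME"
      run kubectl delete -f "$DEPLOYMENT_YAML_FILE"
      ;;
    *)
      echo "usage: remove_old_agent terraform|cfn-helm|cfn-kubectl" >&2
      return 1
      ;;
  esac
}

# Example (dry run, prints the commands only):
#   STACK_NAME=my-cluster-stack remove_old_agent cfn-helm
```

Review the printed commands for each cluster before rerunning with DRY_RUN=0.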

Select the I confirm I have uninstalled the old agent checkbox and then select Done to finish the migration process.

FAQ

Is there downtime during the migration?

No. The migration is a zero-downtime process.